Rumors of a 2048x1536 high-resolution screen for the upcoming iPad 2 have been floating around the web for the last few days, which raises a question: what graphics processing unit (GPU) could adequately power the iPad 2? According to AppleInsider's sources, the iPad 2 and iPhone 5 will use a dual-core SGX543 GPU from Imagination Technologies. [via MacRumors]

In other words, the SGX543 can have any number of cores from two to sixteen with no change in the driver software or the application. All that complex data/pipeline/thread management is done in hardware. No muss, no fuss.

What is GPU Computing?

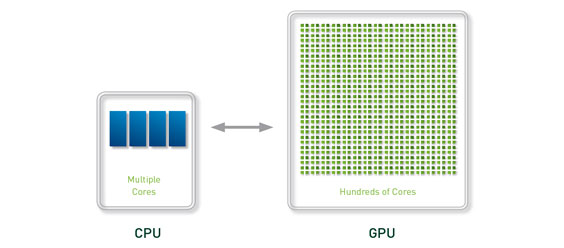

GPU computing or GPGPU is the use of a GPU (graphics processing unit) to do general purpose scientific and engineering computing.

The model for GPU computing is to use a CPU and GPU together in a heterogeneous co-processing computing model. The sequential part of the application runs on the CPU and the computationally intensive part is accelerated by the GPU. From the user’s perspective, the application simply runs faster because the GPU takes over its most demanding work.
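How much faster the whole application runs depends on how much of it can be moved to the GPU. A rough way to estimate this is Amdahl's law, which is not part of the original text and is used here only as an illustration. The function name and the example fractions below are hypothetical.

```python
def overall_speedup(parallel_fraction: float, accelerator_speedup: float) -> float:
    """Estimate whole-application speedup with Amdahl's law.

    parallel_fraction: share of the original run time spent in the
        compute-intensive part that is moved to the GPU (0.0 to 1.0).
    accelerator_speedup: how many times faster that part runs on the GPU.
    Both inputs are illustrative assumptions, not measured values.
    """
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if accelerator_speedup <= 0:
        raise ValueError("accelerator_speedup must be positive")
    sequential_fraction = 1.0 - parallel_fraction  # stays on the CPU
    return 1.0 / (sequential_fraction + parallel_fraction / accelerator_speedup)


if __name__ == "__main__":
    # The sequential part quickly becomes the limit on the overall gain.
    for fraction in (0.5, 0.9, 0.99):
        print(fraction, round(overall_speedup(fraction, 10.0), 2))
```

The estimate ignores the cost of moving data between CPU and GPU memory, so real gains are usually somewhat lower.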

The GPU has evolved over the years to have teraflops of floating point performance. NVIDIA revolutionized the GPGPU and accelerated computing world in 2006-2007 by introducing its new massively parallel architecture called “CUDA”. The CUDA architecture consists of hundreds of processor cores that operate together to crunch through the data set in the application.

The success of GPGPUs in the past few years owes much to the ease of programming with the associated CUDA parallel programming model. In this programming model, application developers modify their application to take the compute-intensive kernels and map them to the GPU. The rest of the application remains on the CPU. Mapping a function to the GPU involves rewriting the function to expose its parallelism and adding “C” keywords to move data to and from the GPU. The developer is tasked with launching tens of thousands of threads simultaneously. The GPU hardware manages the threads and does the thread scheduling.
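The steps described above (copy data to the GPU, run one small function per thread, copy the result back) can be sketched on the CPU. This is plain Python that only imitates the structure of a kernel launch. It is not CUDA code, and `saxpy_kernel`, `launch` and `saxpy_on_device` are hypothetical names chosen for the example.

```python
def saxpy_kernel(thread_id, a, x, y, out):
    """Work done by one logical thread: a single element of a*x + y."""
    # Bounds guard: more threads may be launched than there are elements.
    if thread_id < len(out):
        out[thread_id] = a * x[thread_id] + y[thread_id]


def launch(kernel, num_threads, *args):
    """Stand-in for a kernel launch.

    On a GPU the hardware schedules these threads in parallel; here a
    plain loop runs them one after another, which gives the same result
    because no thread depends on another.
    """
    for thread_id in range(num_threads):
        kernel(thread_id, *args)


def saxpy_on_device(a, host_x, host_y, block_size=256):
    if len(host_x) != len(host_y):
        raise ValueError("input vectors must have the same length")
    # 1. "Copy" the inputs to device memory (emulated with list copies).
    dev_x, dev_y = list(host_x), list(host_y)
    dev_out = [0.0] * len(dev_x)
    # 2. Launch one thread per element, rounded up to whole blocks.
    num_blocks = (len(dev_x) + block_size - 1) // block_size
    launch(saxpy_kernel, num_blocks * block_size, a, dev_x, dev_y, dev_out)
    # 3. "Copy" the result back to the host.
    return list(dev_out)


if __name__ == "__main__":
    print(saxpy_on_device(2.0, [1.0, 2.0, 3.0], [10.0, 20.0, 30.0]))
```

The rest of the program would keep calling `saxpy_on_device` like any ordinary function, which is the sense in which only the compute-intensive part moves to the GPU.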

The Tesla 20-series GPU is based on the “Fermi” architecture, which is the latest CUDA architecture. Fermi is optimized for scientific applications with key features such as 500+ gigaflops of IEEE standard double precision floating point hardware support, L1 and L2 caches, ECC memory error protection, local user-managed data caches in the form of shared memory dispersed throughout the GPU, coalesced memory accesses and so on.

"GPUs have evolved to the point where many real-world applications are easily implemented on them and run significantly faster than on multi-core systems. Future computing architectures will be hybrid systems with parallel-core GPUs working in tandem with multi-core CPUs."

Prof. Jack Dongarra

Director of the Innovative Computing Laboratory

The University of Tennessee

History of GPU Computing

Graphics chips started as fixed-function graphics pipelines. Over the years, these graphics chips became increasingly programmable, which led NVIDIA to introduce the first GPU, or Graphics Processing Unit. In the 1999-2000 timeframe, computer scientists, along with researchers in fields such as medical imaging and electromagnetics, started using GPUs to run general purpose computational applications. They found that the excellent floating point performance of GPUs led to a huge performance boost for a range of scientific applications. This was the advent of the movement called GPGPU, or General Purpose computing on GPUs.

The problem was that GPGPU required using graphics APIs and shading languages such as OpenGL and Cg to program the GPU. Developers had to make their scientific applications look like graphics applications and map them into problems that drew triangles and polygons. This kept the tremendous performance of GPUs out of reach for much of the scientific community.

NVIDIA realized the potential to bring this performance to the larger scientific community and decided to invest in modifying the GPU to make it fully programmable for scientific applications and added support for high-level languages like C, C++, and Fortran. This led to the CUDA architecture for the GPU.

CUDA Parallel Architecture and Programming Model

The CUDA parallel hardware architecture is accompanied by the CUDA parallel programming model, which provides a set of abstractions for expressing fine-grained and coarse-grained data and task parallelism. The programmer can choose to express the parallelism in high-level languages such as C, C++, and Fortran, or through driver APIs such as OpenCL™ and DirectX™-11 Compute.

NVIDIA today provides support for programming the GPU with C, C++, Fortran, OpenCL, and DirectCompute. A set of software development tools, along with libraries and middleware, is available to developers, as shown in the figure above. The first CUDA release allowed the GPU to be programmed using C with a minimal set of keywords or extensions; support for Fortran, OpenCL, and the others followed later.

The CUDA parallel programming model guides programmers to partition the problem into coarse sub-problems that can be solved independently in parallel. Fine grain parallelism in the sub-problems is then expressed such that each sub-problem can be solved cooperatively in parallel.
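A sum over a long list is a small way to picture this two-level split. The sketch below is CPU-only Python, not CUDA code, and the function names and the block size of 4 are assumptions made for the example. The list is cut into blocks that could be summed independently (the coarse level), and inside each block the additions are arranged as a tree so that every addition within one step could be done by a different thread (the fine level).

```python
def block_sum(chunk):
    """Fine-grained step: tree reduction inside one block.

    In each pass, the additions at different positions i touch disjoint
    elements, so on a GPU they could be carried out by cooperating
    threads at the same time. Here they run in a simple loop.
    """
    values = list(chunk)
    n = len(values)
    if n == 0:
        return 0
    stride = 1
    while stride < n:
        for i in range(0, n - stride, 2 * stride):
            values[i] += values[i + stride]
        stride *= 2
    return values[0]


def parallel_sum(data, block_size=4):
    """Coarse-grained step: split into blocks that need nothing from each other."""
    if block_size <= 0:
        raise ValueError("block_size must be positive")
    blocks = [data[start:start + block_size]
              for start in range(0, len(data), block_size)]
    partial_sums = [block_sum(block) for block in blocks]  # independent work
    return block_sum(partial_sums)  # combine the per-block results


if __name__ == "__main__":
    print(parallel_sum(list(range(1, 11))))
```

Because the blocks never communicate, the same program could run on hardware with few or many cores without being rewritten.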

The CUDA GPU architecture and the corresponding CUDA parallel computing model are now widely deployed, with thousands of applications and thousands of published research papers. CUDA Zone lists many of these applications and papers.

OpenCL is a trademark of Apple Inc. used under license to the Khronos Group Inc.

DirectX is a registered trademark of Microsoft Corporation.

http://www.nvidia.com/object/GPU_Computing.html
